Multilayer Perceptron

%matplotlib inline

import torch

import numpy as np

import matplotlib.pylab as plt

import sys

sys.path.append("..")

import d2lzh_pytorch as d2l

print(torch.__version__)

1.11.0+cu113

Activation functions

def xyplot(x_vals, y_vals, name):
    d2l.set_figsize(figsize=(5, 2.5))
    # detach() drops the autograd graph so the tensors can be converted to NumPy
    d2l.plt.plot(x_vals.detach().numpy(), y_vals.detach().numpy())
    d2l.plt.xlabel('x')
    d2l.plt.ylabel(name + '(x)')

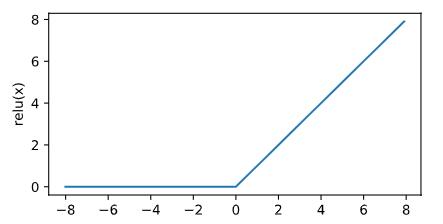

ReLU function

x = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)

y = x.relu()

xyplot(x, y, 'relu')

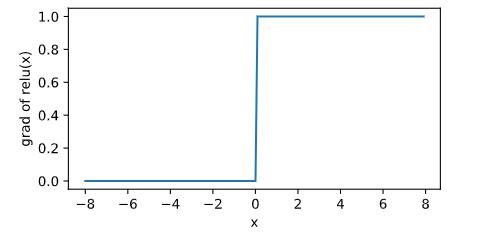

y.sum().backward()

xyplot(x, x.grad, 'grad of relu')

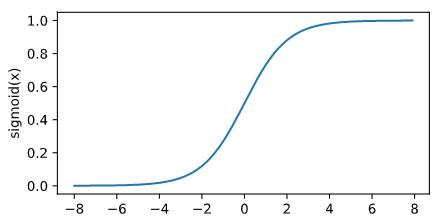
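As a quick numerical check on the two ReLU plots above, the sketch below (not part of the original notebook) confirms that the autograd gradient of ReLU is 0 for negative inputs and 1 for positive inputs. The names `x_check`, `y_check`, and `grad` are illustrative, and the input deliberately avoids x = 0, where the derivative is not defined.

```python
import torch

# Small hand-picked input; x = 0 is left out because ReLU is not differentiable there
x_check = torch.tensor([-2.0, -0.5, 0.5, 2.0], requires_grad=True)
y_check = x_check.relu()

# Summing gives a scalar, so backward() fills x_check.grad with d relu / dx per element
y_check.sum().backward()

grad = x_check.grad
print(grad)  # tensor([0., 0., 1., 1.])
```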

Sigmoid function

y = x.sigmoid()

xyplot(x, y, 'sigmoid')

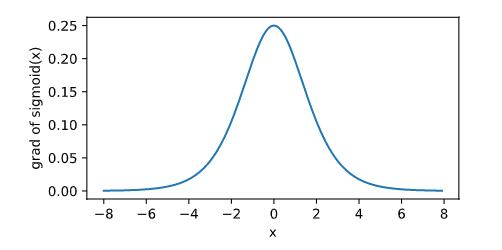

x.grad.zero_()

y.sum().backward()

xyplot(x, x.grad, 'grad of sigmoid')

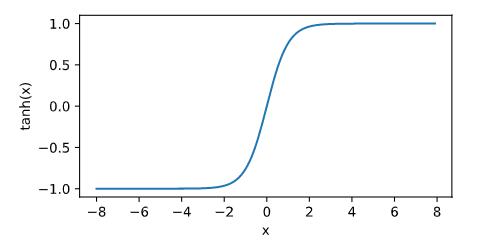
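The gradient curve plotted above should follow the closed form sigmoid(x) * (1 - sigmoid(x)), which never exceeds 0.25. A minimal sketch that compares autograd against this formula is shown here; `x_check`, `s`, and `expected` are illustrative names rather than variables from the notebook.

```python
import torch

# Same grid as the notebook, but in a separate tensor so x.grad is left untouched
x_check = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)
s = x_check.sigmoid()
s.sum().backward()

# Analytic derivative of the sigmoid
expected = (s * (1 - s)).detach()

matches = torch.allclose(x_check.grad, expected, atol=1e-6)
peak = x_check.grad.max().item()
print(matches, peak)
```

The peak sits near x = 0 and the gradient decays toward 0 on both sides, which is the saturation behaviour visible in the plot.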

Tanh function

y = x.tanh()

xyplot(x, y, 'tanh')

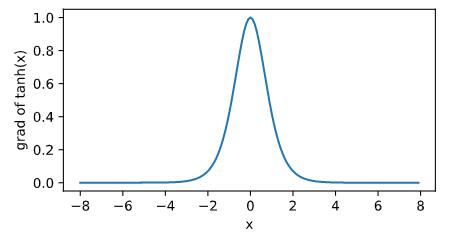

x.grad.zero_()

y.sum().backward()

xyplot(x, x.grad, 'grad of tanh')
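Two standard identities help in reading the tanh plots: tanh(x) = 2 * sigmoid(2x) - 1, and d tanh(x) / dx = 1 - tanh(x)^2. The following sketch checks both numerically; the variable names are illustrative and not taken from the notebook.

```python
import torch

x_check = torch.arange(-8.0, 8.0, 0.1, requires_grad=True)
t = x_check.tanh()
t.sum().backward()

# Identity 1: tanh is a rescaled, shifted sigmoid
rescaled_sigmoid = 2 * torch.sigmoid(2 * x_check.detach()) - 1
same_function = torch.allclose(t.detach(), rescaled_sigmoid, atol=1e-5)

# Identity 2: the derivative is 1 - tanh(x)^2, which peaks at 1 when x = 0
expected_grad = 1 - t.detach() ** 2
same_gradient = torch.allclose(x_check.grad, expected_grad, atol=1e-6)

print(same_function, same_gradient)
```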

1. Implementing a multilayer perceptron from scratch

import torch

import numpy as np

import sys

sys.path.append("..")  # so that d2lzh_pytorch can be imported from the parent directory

import d2lzh_pytorch as d2l

print(torch.__version__)

1.11.0+cu113

Loading and reading the data

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

Defining the model parameters

num_inputs, num_outputs, num_hiddens = 784, 10, 256

W1 = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_hiddens)), dtype=torch.float)

b1 = torch.zeros(num_hiddens, dtype=torch.float)

W2 = torch.tensor(np.random.normal(0, 0.01, (num_hiddens, num_outputs)), dtype=torch.float)

b2 = torch.zeros(num_outputs, dtype=torch.float)

params = [W1, b1, W2, b2]

for param in params:
    param.requires_grad_(requires_grad=True)

Defining the activation function

def relu(X):
    # Elementwise max(X, 0); the scalar tensor broadcasts against X
    return torch.max(input=X, other=torch.tensor(0.0))
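To see that the hand-written `relu` behaves like the built-in, the sketch below compares it with `torch.relu` on a small tensor. The input `X_demo` is made up for illustration; `torch.clamp(X, min=0)` would be another way to express the same operation.

```python
import torch

def relu(X):
    # Same definition as in the notebook: elementwise max(X, 0)
    return torch.max(input=X, other=torch.tensor(0.0))

# Hypothetical input with negative, zero, and positive entries
X_demo = torch.tensor([[-1.5, 0.0, 2.0],
                       [3.0, -0.1, 0.5]])

out = relu(X_demo)
same_as_builtin = torch.equal(out, torch.relu(X_demo))
same_as_clamp = torch.equal(out, torch.clamp(X_demo, min=0))
print(out)
print(same_as_builtin, same_as_clamp)
```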

Defining the model

def net(X):
    # Flatten each image into a vector of length num_inputs
    X = X.view((-1, num_inputs))
    H = relu(torch.matmul(X, W1) + b1)
    return torch.matmul(H, W2) + b2
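A shape check is a cheap way to confirm the forward pass is wired correctly before training. The sketch below rebuilds the same two-layer computation with freshly sampled weights (using `torch.randn` instead of NumPy, purely for brevity) and feeds it a random batch shaped like Fashion-MNIST images. The batch size of 4 and the name `fake_batch` are assumptions for illustration.

```python
import torch

num_inputs, num_outputs, num_hiddens = 784, 10, 256

# Stand-in parameters with the same shapes and scale as in the notebook
W1 = torch.randn(num_inputs, num_hiddens) * 0.01
b1 = torch.zeros(num_hiddens)
W2 = torch.randn(num_hiddens, num_outputs) * 0.01
b2 = torch.zeros(num_outputs)

def net(X):
    X = X.view((-1, num_inputs))                # (batch, 1, 28, 28) -> (batch, 784)
    H = torch.relu(torch.matmul(X, W1) + b1)    # hidden layer, (batch, 256)
    return torch.matmul(H, W2) + b2             # logits, (batch, 10)

fake_batch = torch.rand(4, 1, 28, 28)  # random pixels, not real data
logits = net(fake_batch)
print(logits.shape)  # torch.Size([4, 10])
```

The output is one row of 10 unnormalized scores per image, which is the form `torch.nn.CrossEntropyLoss` expects.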

Defining the loss function

loss = torch.nn.CrossEntropyLoss()

Training the model

num_epochs, lr = 5, 100.0

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, params, lr)

epoch 1, loss 0.0030, train acc 0.714, test acc 0.753

epoch 2, loss 0.0019, train acc 0.821, test acc 0.777

epoch 3, loss 0.0017, train acc 0.842, test acc 0.834

epoch 4, loss 0.0015, train acc 0.857, test acc 0.839

epoch 5, loss 0.0014, train acc 0.865, test acc 0.845
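The learning rate of 100.0 used above looks far too large at first glance. A plausible explanation, assuming that the `d2l` training helper applies a manual SGD step of the form `param -= lr * grad / batch_size`, is that `torch.nn.CrossEntropyLoss` already averages over the batch by default, so the gradient ends up divided by the batch size twice. The sketch below illustrates that arithmetic; the update rule is an assumption about the helper, not something shown in this notebook.

```python
import torch

torch.manual_seed(0)
batch_size, num_classes = 256, 10

# Random logits and labels, only to compare the two reduction modes
logits = torch.randn(batch_size, num_classes)
labels = torch.randint(0, num_classes, (batch_size,))

mean_loss = torch.nn.CrossEntropyLoss()(logits, labels)                 # default reduction='mean'
sum_loss = torch.nn.CrossEntropyLoss(reduction='sum')(logits, labels)

# The default loss is the summed loss divided by the batch size
already_averaged = torch.allclose(mean_loss * batch_size, sum_loss, rtol=1e-4)

# Assumed update: param -= lr * grad / batch_size, applied to an already averaged loss
lr = 100.0
effective_lr = lr / batch_size
print(already_averaged, effective_lr)  # True 0.390625
```

Under that assumption the effective step size is about 0.39, which is the same order of magnitude as a conventional SGD learning rate for this model.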

2. Concise implementation of a multilayer perceptron

import torch

from torch import nn

from torch.nn import init

import numpy as np

import sys

sys.path.append("..")

import d2lzh_pytorch as d2l

print(torch.__version__)

1.11.0+cu113

Defining the model

num_inputs, num_outputs, num_hiddens = 784, 10, 256

net = nn.Sequential(
    d2l.FlattenLayer(),
    nn.Linear(num_inputs, num_hiddens),
    nn.ReLU(),
    nn.Linear(num_hiddens, num_outputs),
)

for params in net.parameters():
    init.normal_(params, mean=0, std=0.01)
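Note that looping over `net.parameters()` reinitializes the biases as well as the weights, unlike the from-scratch version where the biases start at zero. The sketch below applies the same loop to a single standalone `nn.Linear` layer (a stand-in for the first layer of the model) and inspects the result; the seed and the name `layer` are illustrative.

```python
import torch
from torch import nn
from torch.nn import init

torch.manual_seed(0)

# Stand-in for the first layer of the model above
layer = nn.Linear(784, 256)
for p in layer.parameters():
    init.normal_(p, mean=0, std=0.01)  # applies to both weight and bias

w_std = layer.weight.std().item()
bias_is_nonzero = bool(layer.bias.abs().sum().item() > 0)
print(w_std, bias_is_nonzero)
```

With roughly 200,000 weights the empirical standard deviation should land very close to 0.01. To keep zero biases, one could instead call `init.normal_` on `layer.weight` and `init.zeros_` on `layer.bias`.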

Reading the data and training the model

batch_size = 256

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

loss = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(net.parameters(), lr=0.5)

num_epochs = 5

d2l.train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, None, None, optimizer)

epoch 1, loss 0.0031, train acc 0.703, test acc 0.757

epoch 2, loss 0.0019, train acc 0.824, test acc 0.822

epoch 3, loss 0.0016, train acc 0.845, test acc 0.825

epoch 4, loss 0.0015, train acc 0.855, test acc 0.811

epoch 5, loss 0.0014, train acc 0.865, test acc 0.846
